For years, Windows users have quietly benefited from performance optimizations baked deep into the operating system—features most people never see, but definitely feel. Now, Linux is finally getting one of those behind-the-scenes upgrades, and it could make a noticeable difference in how efficiently CPUs are used.

This shift comes at an interesting time, as Microsoft itself has been busy rolling out fresh performance improvements across Windows 10, Windows 11, and Windows Server.

Microsoft's Recent Push for Faster Windows Performance

Recently, Microsoft announced two major performance-focused enhancements.

The first is a new native feature designed specifically for NVMe SSDs. In certain workloads, this change can improve throughput by as much as 80 percent. The second targets encryption: hardware-accelerated BitLocker, which significantly reduces CPU overhead compared with the traditional software-based approach.

Together, these updates highlight something Microsoft has been refining for years—how Windows squeezes more performance out of modern hardware without requiring user intervention.

Why Windows Schedules CPU Work So Efficiently

One of Windows' biggest strengths lies in how it schedules workloads across CPU cores and threads. Both Windows 10 and 11 work closely with hardware drivers, such as chipset drivers from AMD or Intel, to ensure tasks are sent to the most appropriate cores at the right time.

This isn't just about spreading workloads evenly. It's about using CPU resources intelligently—taking cache layouts, core types, and real-time usage into account to avoid bottlenecks and wasted cycles.

Ryzen X3D: A Real-World Example of Smart Scheduling

A great illustration of this approach can be seen with AMD's Ryzen X3D processors. Higher-core-count models use multiple core complex dies (CCDs), and typically only some of those dies carry the extra 3D V-Cache, so where a task runs determines how much cache it can reach.

On Windows 11, the operating system understands which CCD is best suited for specific workloads and assigns tasks accordingly. AMD's chipset drivers further enhance this behavior through optimizations like the 3D V-Cache Optimizer. Power users have even discovered unofficial tweaks that push performance a bit further.

All of this works because Windows is keenly aware of how cache is structured and how workloads interact with it.

What Is Cache Aware Scheduling?

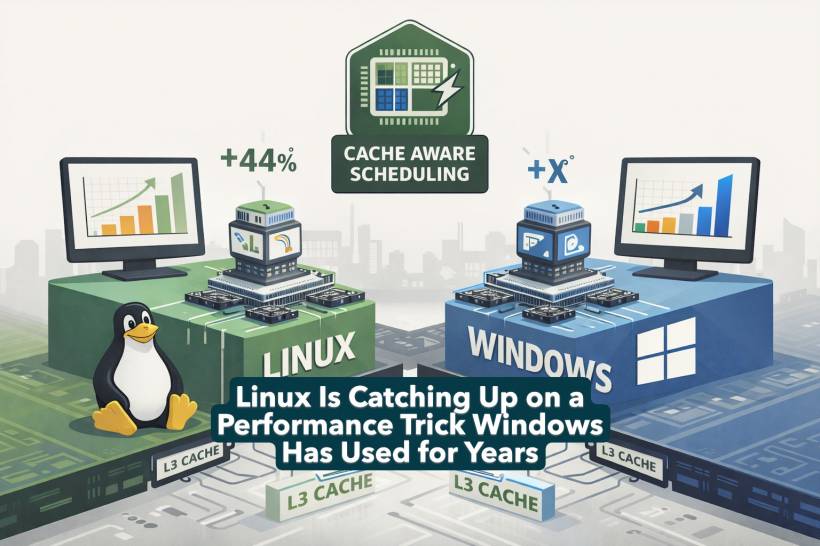

The underlying concept behind these optimizations is known as Cache Aware Scheduling, or CAS.

In simple terms, CAS allows the operating system's scheduler to understand how CPU cache is organized and how heavily it's being used at any given moment. The goal is straightforward: maximize cache hits while minimizing cache misses and unnecessary data movement between cores.

When workloads stay closer to the cache they depend on, performance improves and latency drops—especially in CPU-heavy or memory-sensitive tasks.
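To make the idea concrete, here is a minimal toy sketch of cache-aware placement. It is not the algorithm used by Windows or by the Linux patches; the function name `pick_cpu`, the `llc_domains` mapping, and the "preferred LLC" notion are all hypothetical and exist only for illustration. The sketch assumes each task remembers which last-level cache domain its data is likely to be warm in, and the scheduler tries an idle CPU in that domain before falling back to any other idle CPU.

```python
from typing import Dict, List, Optional, Set


def pick_cpu(
    llc_domains: Dict[int, List[int]],
    idle_cpus: Set[int],
    preferred_llc: Optional[int],
) -> Optional[int]:
    """Pick an idle CPU, preferring one that shares the task's warm cache.

    llc_domains   maps a (hypothetical) cache-domain id to the CPUs sharing it.
    idle_cpus     is the set of CPUs currently free to run a task.
    preferred_llc is the domain where the task's data is assumed to be cached.
    """
    # First choice: stay inside the cache domain the task already uses.
    if preferred_llc is not None:
        for cpu in llc_domains.get(preferred_llc, []):
            if cpu in idle_cpus:
                return cpu
    # Fallback: any idle CPU at all (lowest id, for determinism).
    return min(idle_cpus) if idle_cpus else None


# Two cache domains of four CPUs each, loosely resembling a dual-CCD chip.
domains = {0: [0, 1, 2, 3], 1: [4, 5, 6, 7]}
print(pick_cpu(domains, idle_cpus={2, 5}, preferred_llc=1))  # stays in domain 1
print(pick_cpu(domains, idle_cpus={2}, preferred_llc=1))     # falls back to domain 0
```

A real scheduler also has to weigh load balance, power, and how long the cached data stays useful, which is why the fallback matters: keeping a task waiting for its "home" cache can cost more than a few extra cache misses.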

Linux Is Finally Adopting This Approach

Recent Linux kernel patches confirm that Cache Aware Scheduling is on its way to Linux. According to the patch notes, certain workloads see performance gains of up to 44 percent—an impressive improvement for a change that happens entirely under the hood.

For now, the implementation focuses on the last-level cache (LLC), typically the L3 cache on modern CPUs. Developers found that extending the same logic beyond that level, out toward system memory, didn't deliver meaningful benefits.
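If you are curious which CPUs share a last-level cache on your own Linux machine, the kernel exposes that layout through sysfs. The sketch below is a rough illustration rather than anything taken from the patches: it reads the standard `cache/index*/level` and `shared_cpu_list` files under `/sys/devices/system/cpu`, treats the highest cache level it finds for each CPU as that CPU's LLC, and groups CPUs accordingly. The helper names `parse_cpu_list` and `llc_groups` are made up for this example.

```python
from pathlib import Path
from typing import List, Set


def parse_cpu_list(text: str) -> Set[int]:
    """Parse a kernel CPU list such as '0-3,8,10-11' into a set of ints."""
    cpus: Set[int] = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            low, high = part.split("-")
            cpus.update(range(int(low), int(high) + 1))
        else:
            cpus.add(int(part))
    return cpus


def llc_groups(sysfs_root: str = "/sys/devices/system/cpu") -> List[List[int]]:
    """Return groups of CPUs that share their highest-level cache."""
    groups = set()
    for cpu_dir in Path(sysfs_root).glob("cpu[0-9]*"):
        best_level, best_cpus = -1, None
        for index_dir in cpu_dir.glob("cache/index[0-9]*"):
            try:
                level = int((index_dir / "level").read_text())
                shared = (index_dir / "shared_cpu_list").read_text()
            except (OSError, ValueError):
                continue  # entry missing or unreadable; skip it
            if level > best_level:
                best_level = level
                best_cpus = frozenset(parse_cpu_list(shared))
        if best_cpus:
            groups.add(best_cpus)
    return sorted(sorted(group) for group in groups)


if __name__ == "__main__":
    for group in llc_groups():
        print("CPUs sharing an LLC:", group)
```

On a chip with a single shared L3 this prints one group; on a multi-CCD processor you would expect one group per CCD. Virtual machines and some platforms do not expose the cache directories at all, in which case the function simply returns an empty list.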

This careful, targeted rollout suggests the Linux kernel team is prioritizing real-world gains over theoretical optimizations.

Windows Has Had This for a Long Time—Quietly

What makes this development particularly interesting is that Windows has supported Cache Aware Scheduling for years. The feature has existed within the Windows kernel since Windows 10 and continues to be part of Windows 11 today.

Despite its importance, Microsoft has never publicly documented CAS in much detail. It's one of those foundational technologies that simply works in the background, improving performance without fanfare.

Why This Matters Going Forward

Linux adopting Cache Aware Scheduling is a big deal—not because Windows loses an advantage, but because Linux workloads stand to gain a significant efficiency boost, especially on modern multi-core CPUs with complex cache hierarchies.

As processors become more sophisticated, raw clock speed matters less than how intelligently an operating system manages resources. With CAS finally entering the Linux kernel, the performance gap in certain workloads may narrow even further—bringing Linux one step closer to parity with Windows in an area where Microsoft has quietly led for years.
