In the world of IT and systems engineering, there's a golden rule that everyone learns early on: never experiment directly on your live environment. Changes should be tested, validated, broken, repaired, and verified long before they ever touch anything users depend on. That's why most organizations maintain multiple environments such as Development, Test, and Staging before rolling anything into Production. It is a time-tested safety net designed to protect reliability.

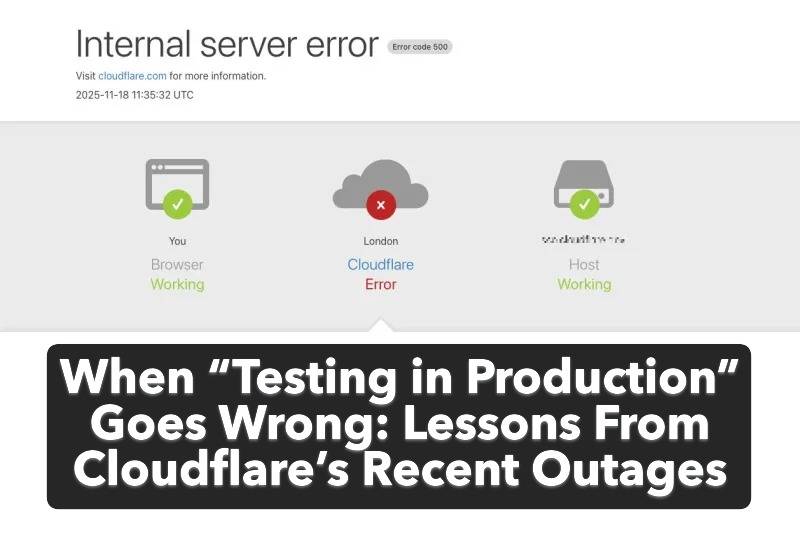

But recent incidents involving Cloudflare—a company widely trusted to keep huge portions of the internet online—have sparked an uncomfortable question: what happens when even industry giants start bending that rule?

The Ideal World vs. Reality

In an ideal setup, Development environments are where fresh ideas are born and break frequently. Testing is where those ideas start shaping into something usable. Staging is where systems mimic real-world production behavior without the risk. Only when everything checks out does anything reach Production.

Most organizations swear by this. Yet Cloudflare's recent outages suggest that even highly sophisticated players sometimes shortcut the process in favor of speed. And when that happens, the ripple effect isn't small—because Cloudflare is the backbone for countless websites and services worldwide.

What Actually Happened?

Cloudflare recently published a post-mortem describing an outage on December 5th linked to changes involving React Server Components (RSC). The goal was legitimate: address a critical vulnerability (CVE-2025-55182) by enabling a 1MB buffer. The problem? The rollout happened live on Production.

During deployment, Cloudflare discovered that one of their testing tools couldn't handle the new buffer size. Instead of halting and reevaluating, they chose to disable the tool globally. This decision effectively bypassed gradual rollout controls—the very safeguards meant to prevent widespread failure.

And then things cascaded.

The change triggered unexpected behavior in their older Lua-based FL1 proxy. A NIL value popped up where it shouldn't have existed, leading to HTTP 500 errors and service interruptions. In simple terms: users started seeing failures because Production became the testing ground.

Not the First Time

This wasn't an isolated mishap. Not long before, Cloudflare had already dealt with another headache involving their Rust-based FL2 proxy. A corrupted input file caused the proxy to crash, and it took significantly longer to diagnose and fix.

So this new incident wasn't just a fluke. It highlighted a worrying pattern: critical components being exposed to real-world conditions before they were fully validated.

Why This Matters More Than Just One Outage

Cloudflare isn't a small startup experimenting for the first time. They're deeply embedded into global internet infrastructure. When Cloudflare breaks, the internet feels it.

More importantly, their situation should serve as a warning for everyone working in IT, DevOps, networking, and software engineering. Skipping structured testing may save an hour today, but it can cost days of chaos later. Many engineers have experienced environments where "Staging" exists only on paper and "real testing" quietly happens in Production. It may feel efficient—until something fails at scale and support teams drown in complaints.

The Bigger Lesson

Every outage offers a learning opportunity. In this case, the takeaway isn't just about Cloudflare. It's about discipline in engineering culture. Testing environments exist for a reason. Gradual rollouts exist for a reason. And no organization—no matter how technically advanced—can afford complacency.

Cloudflare did eventually resolve the issue quickly. But the pattern of issues points to a deeper need for better validation processes and stronger guardrails before deployments hit live users.

Because once Production catches fire, no management directive, clever workaround, or emergency memo can undo the damage fast enough.

Comments